Machine Learning for Audio Analysis

I’m passionate about machine listening (using machine learning to analyze audio data), with a focus on bioacoustics and biodiversity monitoring. The sounds I study come from many species, and together they form a "soundscape," a rich blend of sounds that reveals information about the animals present.

Soundscapes hold valuable insights that can be automatically extracted using machine listening methods. My work addresses two key challenges:

- The need for robust models that perform well even with limited labeled data (Martinsson et al., 2022).

- The labor-intensive process of labeling bioacoustic data, which I aim to make more efficient and accurate (Martinsson et al., 2024; Martinsson et al., 2025).

I strive to develop tools that not only advance bioacoustic analysis but also apply more broadly to other domains with high-dimensional data (Martinsson, 2024).

Want to learn more? Explore my blog or publications for insights into machine listening, bioacoustics, and more.

Machine Listening for Bioacoustics

My core motivation is the potential of machine listening to automate the sensing and monitoring of natural environments, especially for tracking animal populations.

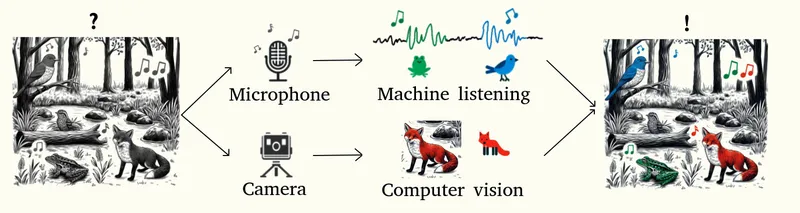

As shown above, sound often complements visual data, providing a fuller understanding of ecosystems. For instance, cameras may capture larger animals like foxes, while microphones detect acoustically active species like birds and frogs. By combining these data sources, we can monitor ecosystems more comprehensively.

Automated Species Detection and Biodiversity Estimation

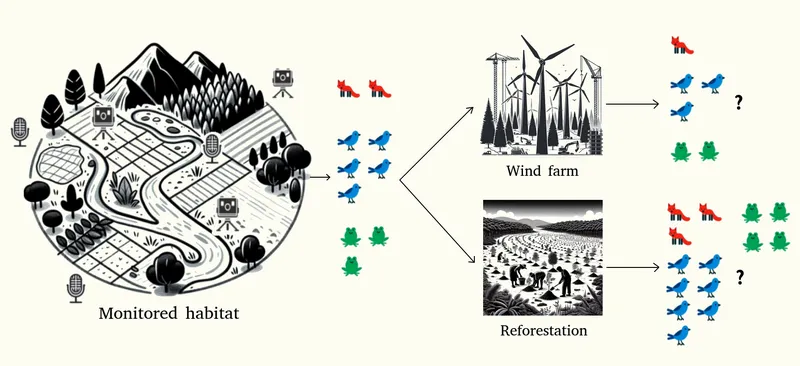

My goal is to develop machine listening methods that enable automated species detection and biodiversity estimation. Continuous habitat monitoring using acoustic and visual sensors can reveal how developments like wind farms or reforestation impact ecosystems.

By creating machine listening tools for species detection and classification, we can unlock insights into biodiversity trends, supporting conservation efforts and guiding ecological interventions.

AI-Generated Podcast on Machine Listening for Bioacoustics

This AI-generated episode is an easy listen and offers a glimpse into my vision for machine listening in bioacoustics, though some claims are exaggerated. It was generated by prompting NotebookLM with my webpage.

Affiliations and Collaborations

I am a PhD student in the Deep Learning Group at RISE and am affiliated with Lund University. I am also a core member of Climate AI Nordics. My supervisors are Olof Mogren and Maria Sandsten. I have recently collaborated with Tuomas Virtanen of Tampere University, following a research visit to its Audio Research Group.

Current Bioacoustics Collaborations

- AukLab-Audio: Acoustic Monitoring of Seabirds in the Baltic Sea, together with Jonas Hentati Sundberg, Delia Fano Yela, and Olof Mogren

Do not hesitate to contact me if you are interested in collaborations. Whether you’re a machine learning researcher, ecologist, or policymaker, I’d love to discuss ideas for advancing machine listening and biodiversity monitoring.

References

- Martinsson, John and Mogren, Olof and Sandsten, Maria and Virtanen, Tuomas (2024). From Weak to Strong Sound Event Labels using Adaptive Change-Point Detection and Active Learning. In 2024 32nd European Signal Processing Conference (EUSIPCO) (pp. 902-906). DOI: 10.23919/EUSIPCO63174.2024.10715098.

- Martinsson, John and Virtanen, Tuomas and Sandsten, Maria and Mogren, Olof (2025). The Accuracy Cost of Weakness: A Theoretical Analysis of Fixed-Segment Weak Labeling for Events in Time. Transactions on Machine Learning Research.

- Martinsson, John and Willbo, Martin and Pirinen, Aleksis and Mogren, Olof and Sandsten, Maria (2022). Few-Shot Bioacoustic Event Detection Using an Event-Length Adapted Ensemble of Prototypical Networks. In Detection and Classification of Acoustic Scenes and Events 2022.

- Martinsson, John (2024). Efficient and precise annotation of local structures in data. Centre for Mathematical Sciences, Lund University. ISBN: 978-91-8104-199-6.