Acoustic Monitoring of Guillemots at Stora Karlsö

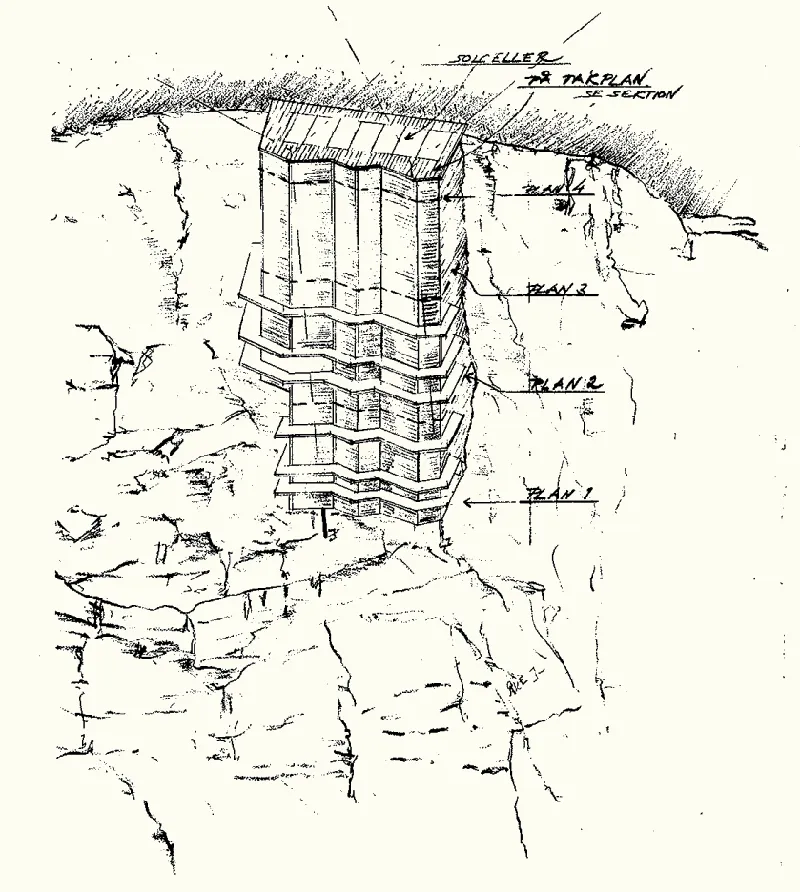

Seabirds like Guillemots offer valuable clues about marine conditions. By studying their calls and behaviors, we hope to learn more about ocean ecosystems. On Stora Karlsö there is an artificial cliff where researchers study these birds. Imagine a birdhouse turned inside out: the researchers sit inside the house and peek out at the birds living on the ledges outside. This is the AukLab. Researchers at the AukLab have studied the Common Guillemot this way for years, and sensors such as cameras and weight scales continuously collect data describing the birds' daily lives. This year our audio team (Delia Fano Yela, Olof Mogren and I) installed an acoustic monitoring setup on the island, made possible by generous funding secured by Jonas Hentati Sundberg and Henrik Österblom. Sound is an important part of how animals communicate and how we as humans understand and interpret the world. By adding continuous audio recordings to the AukLab, we therefore aim to gain an even richer view of Common Guillemot life.

Our goal is to capture Guillemot calls throughout the entire season and align them with the existing data streams, in particular video. More concretely, we will, among other things, explore whether visual observations of fish arrivals in the video streams can be linked to corresponding acoustic events in the audio stream. Detecting fish arrivals is important for understanding the health of the colony, and if we succeed, audio could complement the existing video data by revealing fish arrivals even when they are not visible to the cameras. We therefore need reliable, time-stamped data streams so that we can trace each call back to the events and behaviors happening at that exact moment.
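
To make "tracing a call back to a moment" concrete, here is a minimal sketch of mapping a wall-clock timestamp (say, a fish arrival annotated in the video) to an hourly audio file and a sample index within it. It rests on hypothetical assumptions not stated in this post: each file covers one UTC hour starting exactly on the hour, is named after its start time (e.g. `20240601_130000.wav`), and is sampled at 48 kHz.

```python
from datetime import datetime, timedelta, timezone

SAMPLE_RATE = 48_000  # assumption: 48 kHz; the actual rate is not stated here

def locate(event_time: datetime) -> tuple[str, int]:
    """Map a timezone-aware timestamp to (hypothetical file name, sample index).

    Assumes one file per UTC hour, starting exactly on the hour and
    named by its start time, e.g. 20240601_130000.wav.
    """
    if event_time.tzinfo is None:
        raise ValueError("event_time must be timezone-aware")
    t = event_time.astimezone(timezone.utc)
    # Floor to the start of the hour-long segment that contains t.
    start = t.replace(minute=0, second=0, microsecond=0)
    # Integer microseconds avoid float rounding pushing past the last sample.
    offset_us = (t - start) // timedelta(microseconds=1)
    sample = offset_us * SAMPLE_RATE // 1_000_000
    return start.strftime("%Y%m%d_%H%M%S") + ".wav", sample
```

For example, an event at 13:20:05 UTC on 1 June 2024 maps to `20240601_130000.wav`, sample 57,840,000 (1,205 s × 48,000 samples/s). The lookup is only as accurate as the clocks behind both timestamps, which is why synchronization gets so much attention below.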

The Big Picture: Multi-Pi Recording Pipeline

Managing audio capture, timekeeping, backups, and analytics on a single device felt risky and prone to failure. Instead, we spread these tasks across three Raspberry Pis. Here’s a simple diagram of the acoustic monitoring system:

```text
                                  [ Clock Pi ]
                        (GPS-based Stratum-1 NTP Server)
          ┌─────────────────────────────┬──────────────────────┐
          │                             │                      │
          ▼                             ▼                      ▼
  [ Recording Pi ]              [ Analytics Pi ]        [ IP Cameras ]
┌───────────────────┐         ┌───────────────────┐   ┌─────────────────┐
│ - Sync via NTP    │         │ - Sync via NTP    │   │ - Sync via NTP  │
│ - 8-ch Zoom F8    │ (SSHFS) │ - Rsync to NAS    │   │ - Video capture │
│ - ffmpeg segments │────────►│ - Log summaries   │   │ - Milestone     │
│ - Local USB bkup  │         │ - Audio analytics │   └─────────────────┘
└───────────────────┘         └───────────────────┘
          ▲ (mount)                     ▲ (NFS)
          │                             │
    [ USB drive ]                    [ NAS ]
                     ┌────────────────────────────────────┐
                     │ - Long-term storage of audio files │
                     └────────────────────────────────────┘
```

What are the roles of the three Pis?

Recording Pi: Captures audio from a Zoom F8 Pro field recorder (8 channels) connected via USB. It pipes arecord into ffmpeg to split the audio into hourly RF64 WAV files and stores backups on a USB drive.
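
A minimal sketch of such a pipeline, driven from Python. The ALSA device name `hw:F8,0`, the 48 kHz / 24-bit (`S24_3LE`) format, the `/data/audio` directory and the file-name pattern are placeholders made up for illustration, not our actual configuration. The flags themselves are standard `arecord` options and ffmpeg segment-muxer and WAV-muxer options: ffmpeg starts a new file every 3600 s aligned to the wall clock, and the WAV muxer switches to an RF64 header only when a file outgrows plain RIFF.

```python
import shlex
import subprocess

def build_commands(device="hw:F8,0", rate=48_000, channels=8, out_dir="/data/audio"):
    """Build arecord and ffmpeg argument lists (device, format, paths are placeholders)."""
    arecord = [
        "arecord", "-D", device,
        "-c", str(channels), "-r", str(rate),
        "-f", "S24_3LE",  # 24-bit little-endian samples packed in 3 bytes
        "-t", "raw",      # headerless PCM written to stdout
    ]
    ffmpeg = [
        "ffmpeg", "-hide_banner",
        # Describe the raw PCM stream arriving on stdin.
        "-f", "s24le", "-ar", str(rate), "-ac", str(channels), "-i", "pipe:0",
        "-c:a", "pcm_s24le",
        # Cut a new file every hour, aligned to the wall clock.
        "-f", "segment", "-segment_time", "3600", "-segment_atclocktime", "1",
        "-strftime", "1",
        # WAV output that upgrades to an RF64 header only if a file gets too big.
        "-segment_format", "wav", "-segment_format_options", "rf64=auto",
        f"{out_dir}/%Y%m%d_%H%M%S.wav",
    ]
    return arecord, ffmpeg

def run_pipeline(**kwargs):
    """Rough equivalent of `arecord ... | ffmpeg ...` in a shell."""
    arecord_cmd, ffmpeg_cmd = build_commands(**kwargs)
    rec = subprocess.Popen(arecord_cmd, stdout=subprocess.PIPE)
    enc = subprocess.Popen(ffmpeg_cmd, stdin=rec.stdout)
    rec.stdout.close()  # so arecord gets SIGPIPE if ffmpeg exits first
    enc.wait()
    rec.wait()
    return enc.returncode

if __name__ == "__main__":
    # Print the commands so they can be tried by hand in a shell first.
    for cmd in build_commands():
        print(shlex.join(cmd))
```

Keeping the command construction separate from `run_pipeline()` makes it easy to inspect and test the exact flags before anything touches the recorder.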

Clock Pi: Uses a GPS/PPS HAT to act as a local Stratum-1 NTP server. This keeps every device on the same accurate clock, minimizing drift between the audio and the other data streams.
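
For readers curious how the other devices use the Clock Pi: an NTP client estimates its clock offset and the round-trip delay from four timestamps per request/response exchange, as defined in RFC 5905. The sketch below implements only that arithmetic; it is not code from chrony or ntpd, and the example numbers are made up.

```python
def ntp_offset_delay(t1, t2, t3, t4):
    """Offset and round-trip delay from one NTP exchange (RFC 5905 on-wire formulas).

    t1: client sends request   (client clock)
    t2: server receives it     (server clock)
    t3: server sends reply     (server clock)
    t4: client receives reply  (client clock)
    A positive offset means the client clock is behind the server.
    """
    offset = ((t2 - t1) + (t3 - t4)) / 2
    delay = (t4 - t1) - (t3 - t2)  # network round trip, excluding server processing
    return offset, delay
```

With a client running 5 ms behind, 2 ms each way on the network and 1 ms of server processing, the timestamps (in ms) are 95, 102, 103 and 100, giving an offset of 5 ms and a delay of 4 ms. The offset estimate assumes symmetric paths; any asymmetry introduces an error of at most half the delay, which stays small with a server on the local network.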

Analytics Pi: Moves finalized recordings to the NAS, verifies file integrity, and can do quick real-time analytics (like threshold-based event detection). If anything fails, it alerts us or logs the issue.
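
Two of the Analytics Pi's jobs are easy to sketch. The first function copies a finished file and confirms the copy by comparing SHA-256 digests; the diagram indicates rsync does the actual transfer, so treat this as an illustration of the check rather than our script. The second is a very simple threshold detector on frame RMS levels; the 50 ms frame length and 0.1 threshold are arbitrary placeholders.

```python
import hashlib
import math
import shutil
from pathlib import Path

def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in 1 MiB chunks so large recordings never sit fully in memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()

def copy_and_verify(src, dst_dir):
    """Copy src into dst_dir, then compare digests; raise OSError on mismatch."""
    dst = Path(dst_dir) / Path(src).name
    shutil.copy2(src, dst)
    if sha256_of(src) != sha256_of(dst):
        raise OSError(f"checksum mismatch for {dst}")
    return dst

def detect_events(samples, rate, frame_len=0.05, threshold=0.1):
    """Return onset times (s) where frame RMS rises above threshold.

    samples: mono floats in [-1, 1]; frame_len in seconds.
    A trailing partial frame is ignored.
    """
    n = max(1, int(frame_len * rate))
    onsets, active = [], False
    for start in range(0, len(samples) - n + 1, n):
        frame = samples[start:start + n]
        rms = math.sqrt(sum(x * x for x in frame) / n)
        loud = rms > threshold
        if loud and not active:
            onsets.append(start / rate)  # rising edge: a new event begins
        active = loud
    return onsets
```

Reading the copy back immediately may be served from the operating system's page cache, so this check mainly guards against transfer errors rather than a failing disk.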

Essential Equipment and Setup

Central to our approach is the Zoom F8 Pro, which records eight channels in sync. We have placed eight DPA microphones near some of the cameras at the AukLab, with protective windshields or netting as needed. The Recording Pi is a Raspberry Pi 5 that reads from the recorder, piping arecord into ffmpeg to write large RF64 WAV files. Meanwhile, the Clock Pi’s GPS-based time service keeps everything aligned, and the Analytics Pi handles backups and integrity checks.
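
Why RF64? A classic RIFF/WAV file stores chunk sizes in 32-bit fields, capping it at roughly 4 GiB. The quick calculation below shows how close an hour of eight-channel audio comes to that limit; the 48 kHz and 96 kHz rates and the 24-bit depth are assumptions for illustration, not figures from our setup.

```python
# Assumed formats for illustration only; the post does not state them.
RIFF_LIMIT = 2**32 - 1  # largest value a 32-bit RIFF/WAV size field can hold, in bytes

def bytes_per_hour(rate_hz: int, channels: int, bits: int) -> int:
    """Raw PCM payload for one hour of audio (headers ignored)."""
    return rate_hz * channels * (bits // 8) * 3600

for rate in (48_000, 96_000):
    size = bytes_per_hour(rate, channels=8, bits=24)
    print(f"{rate} Hz: {size / 1e9:.2f} GB, exceeds RIFF limit: {size > RIFF_LIMIT}")
```

At 48 kHz and 24 bits, an hour is 4,147,200,000 bytes, just under the limit; at 96 kHz it doubles to about 8.29 GB and no longer fits, which is where RF64's 64-bit size fields come in.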

Future Machine Learning Steps

From a data-science perspective, time-synced, multi-channel audio paired with other sensors could reveal patterns we haven’t seen yet. Early stages might involve grouping similar call types and linking them to straightforward behaviors like fish deliveries. Over time, we hope to catalog a wide variety of calls, see how they cluster, and try to match them to the ringed individuals that make them. Accurate synchronization and thorough annotation are key.
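
As a first, deliberately naive way of linking calls to a behavior, one could pair each annotated fish delivery with the nearest detected acoustic event within a short window. The sketch below does this with a sorted list and binary search; the ±2 s tolerance and the example timestamps are hypothetical, and both inputs are assumed to be in seconds on the shared, NTP-synced clock.

```python
import bisect

def match_events(deliveries, events, tolerance=2.0):
    """Pair each delivery time with the nearest event within +/- tolerance seconds.

    Returns a list of (delivery, event_or_None), in delivery order.
    """
    events = sorted(events)
    pairs = []
    for t in deliveries:
        i = bisect.bisect_left(events, t)
        # The nearest event is either just before or just after t.
        candidates = events[max(0, i - 1):i + 1]
        best = min(candidates, key=lambda e: abs(e - t), default=None)
        if best is not None and abs(best - t) <= tolerance:
            pairs.append((t, best))
        else:
            pairs.append((t, None))
    return pairs
```

For deliveries at 10, 50 and 90 s and events at 9.2, 51.5 and 70 s, the first two deliveries match (9.2 and 51.5) and the third has no event within 2 s. Note that one event may match several deliveries; a stricter analysis would enforce one-to-one matching.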

Looking Ahead to a Digital Cliff

Eventually, we may be able to combine audio, video, thermal data, ID-ring tracking and other sensor data to build a 3D representation of the cliff. Using pose estimation on the video streams, we could potentially track the poses of 3D Guillemot models over time and attach sound events to them. With enough data and labeling effort, we might create a VR-like environment where researchers can virtually visit this unique habitat to watch and hear Guillemots interacting. Nothing will replace the real experience of being there or the fieldwork done at the AukLab, but a virtual model could help us understand the birds’ behavior and ecology in new ways and make the colony accessible to a much wider audience without disturbing the birds.

We’re not there yet, but we see this as an exciting direction. In a virtual model, researchers or the public could observe how changing conditions (like weather or population density) affect call rates or behaviors. Ultimately, we hope this helps us understand how Guillemots cooperate, adapt, and respond to shifts in their environment.

Who’s Involved

A collaborative team from SLU, SU and RISE underpins this work:

- Jonas Hentati Sundberg (SLU): Marine ornithology, AI for biodiversity

- Henrik Österblom (SLU): Seabird ecology

- John Martinsson (RISE): Machine learning in audio and bioacoustics

- Delia Fano Yela (RISE): Signal processing and source separation

- Olof Mogren (RISE): Deep learning for environmental data

We also plan to share our data and findings with a broader community of machine learning and audio researchers, wherever open datasets make sense.

Wrapping Up

Monitoring a cliff full of Guillemots poses real challenges: the birds can be noisy, and the environment can be rough. By sticking to solid time-stamping, continuous recording, and careful backups, we hope to capture a detailed picture of how these seabirds communicate and interact. This post is just an overview; in our next three posts, we’ll describe how each Pi (Clock, Recording, and Analytics) fits into the overall pipeline, including our hardware choices, software setup, and time-synchronization challenges. If you have questions or ideas, we’d love to hear from you as we keep refining this setup.

Stay tuned for updates on our progress and findings!